The world’s search engines are constantly evolving, and search robots tirelessly crawl the ever-growing Internet to seek out and index new content, they are deciphering content in a more human way.

In the past, optimising content for the search engines sometimes meant using methods to artificially manipulate the search results. This practice was known as black hat SEO.

Google and the other search engines want to deliver useful and relevant results; not those which are the result of extensive SEO. These days, the emphasis is on quality and originality and presenting value to human readers, but this is not to say that SEO is dead; but that it has simply evolved – and continues to do so.

Let’s take a look at 5 of the worst SEO mistakes you can make, both black hat methods that spectacularly contravene Google webmaster guidelines, and plain old fails!

1 – Incorrect Use of the Robots Tag

There will often be situations where you don’t actually want certain content to be indexed by the search engines, such as print-friendly versions of a page which otherwise contain the same (duplicate) content, or pages not useful for visitors arriving via Google such as the basket page on an ecommerce website.

However, incorrect use of the noindex meta robots tag can cause havoc for your SEO strategy, since using it for a particular page will completely hide it from all of the major search engines, even if other websites link to it.

If you find that certain pages on your website are not showing up in the search results, look out for the following line in the HTML code of the page in question:

<meta name="robots" content="noindex">

In some situations, the above line may be added accidentally by your content management system, in which case you should remove it if you want the content to be indexed.

However, the robots tag can be used incorrectly to an even more devastating effect. Not only can you use the tag within the markup on individual web pages, you can use it with your websites robots.txt file.

Below is an example of the disallow command being used to stop a single page being indexed, in this case the basket page.

User-agent: * Disallow: /basket

This is fairly common practice if you don’t want a site under construction to be indexed – but add the tag by accident and your entire website will vanish from Google quick as a flash!

Below is a quickfire way to disallow allow your entire website…

User-agent: * Disallow: /

2 – Buying Links

Buying links might seem like a quick and easy way to boost your link building strategy by getting links to your website placed in vast numbers all over the Internet.

However, link buying inevitably leads to your links ending up on hundreds, if not thousands, of irrelevant and low-quality websites. These links often on websites are a part of huge link farm networks – networks that Google are sure to locate eventually.

Such links are valueless at best and counterproductive at worst. Google partly judges the value of your website based on the quality and relevancy of the links that point to it making quality far more important than quantity.

Not only is buying links likely to hurt your standing in the search engines; it may also get your website de-indexed entirely since link buying is and always has been against Google’s terms of use.

Despite the pitfalls, it’s still remarkably easy to buy links online

Despite the pitfalls, it’s still remarkably easy to buy links online

3 – Keyword Stuffing

As you probably know already, a great deal of SEO revolves around the correct use of keywords. However, the search engines have become far more sophisticated in recent years at determining the subject matter of content without needing to rely on things like keyword prevalence.

While keywords are still important, provided that they are subtly placed and fit in naturally with the content, you should avoid paying any heed to the myth that is keyword density.

Paying too much attention to keyword density inevitably leads to keyword stuffing which is something that annoys both Google and your visitors.

Excessive use of keywords will end up being completely counterproductive, greatly reducing the quality of your content in the process.

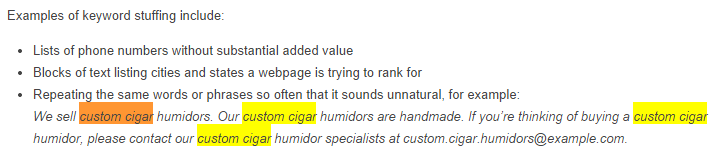

Google’s example of keyword stuffing

Google’s example of keyword stuffing

4 – Duplicate Content

Duplicated content is exactly what it sounds like, and sometimes it can be created accidentally while other times it may be done intentionally (stolen content!). However, duplicate content of any kind confuses the search engine crawlers, since they have trouble deciding which the original content is.

Google try to only ever index what it considers to be the original content; and as such tries not to display the same content twice or more in the search results.

If for some reason you must have duplicate content on your website, such as print-friendly versions of particular pages, be sure to use the noindex tag as mentioned previously, since this will tell most search engine crawlers to skip it.

5 – Cloaking

Sometimes, unscrupulous Internet marketers may use methods to display different content to the search engines to that which is displayed to human visitors, a process which is known as cloaking.

Cloaking may refer to creating hidden text containing keywords that are the same colour as the site background, or doorway pages containing keywords that automatically redirect visitors to the real page.

Such methods exist to artificially manipulate the search engines, and unsurprisingly, they are against Google’s webmaster guidelines. Any website which makes use of cloaking methods in the hope of boosting their standing with the search engines will likely find their site being removed from the search results sooner or later.

Conclusion

All of the above practices make Matt Cutts do this….

And there are plenty more bad SEO practices that could harm your website such as creating content which the search engines cannot read (Flash and Java etc.).

In conclusion, create your website for your human visitors. Focus on providing value and originality to organically encourage traffic and social media sharing, and much of your SEO will take care of itself. After all, a great website will likely impress the search engines as well.

Your Say!

Have you ever made any grave SEO mistakes? Or had any near misses? Let us know in the comments below!

It’s an oldie but goldie!