Google Webmaster Tools provides an invaluable set of features for any webmaster, including the ability to report crawl errors that the search engine robots encounter when visiting your site to analyse and index its content.

Regularly checking your website for crawl errors and fixing them is one of the most important parts of modern search engine optimisation, and Webmaster Tools provides all of the information you need for free.

You can find a list of crawl errors by logging into your Google account, opening Webmaster Tools, selecting your website and navigating to “Crawl > Crawl Errors” in the sidebar.

In this article, we’ll take a look at fixing some of the most common errors that could be harming your website’s ranking in the search results.

Server Connectivity Errors

If Google Webmaster Tools is reporting a server connectivity error, the search engine crawler has been completely unable to access your website.

Such problems are usually caused by a website being too slow to respond or by a configuration error that is blocking access.

If server connectivity problems occur only occasionally, they are most likely caused by your hosting provider being temporarily offline for maintenance or because of an unforeseen technical problem.

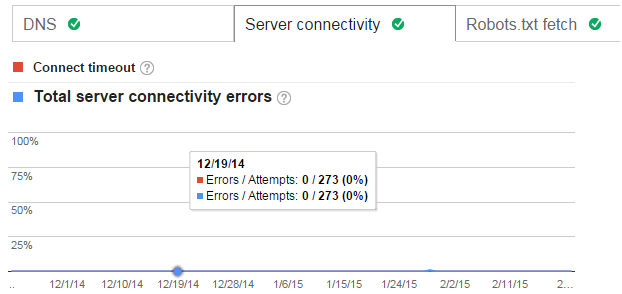

The Server Connectivity section in Webmaster Tools showing zero connectivity errors in the last 90 days

Server connectivity errors can also be caused by request timeouts, whereby a website takes so long to load that Google abandons it.
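If you suspect slow responses, you can time a request yourself. The sketch below (Python 3.9+, standard library only) uses a hypothetical helper, `time_request`; its 10-second timeout is an arbitrary threshold, not the limit Googlebot actually uses, and it measures from your own connection, which may differ from what Google experiences.

```python
import time
import urllib.error
import urllib.request


def time_request(url: str, timeout: float = 10.0) -> tuple[int, float]:
    """Fetch `url` and return (HTTP status code, seconds taken).

    `timeout` applies to connecting and to each read, not to the whole
    download. Raises urllib.error.URLError or a timeout error if the
    server cannot be reached or stops responding.
    """
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            response.read()  # include the time taken to download the body
            status = response.status
    except urllib.error.HTTPError as err:
        # The server did respond, but with an error status such as 500 or 503.
        status = err.code
    return status, time.perf_counter() - start
```

For example, `time_request("https://www.example.com/")` (substituting your own URL) returns the status code and the elapsed time in seconds; consistently slow results or timeouts are worth raising with your hosting provider.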

In the case of a dynamic website, such as one powered by WordPress or any other content management system (CMS), the problem could lie with the database configuration.

In these cases, you’ll need to contact your hosting provider and ask them to investigate the slowdown and to remove any restrictions on your web server that may be blocking Google’s access.

DNS Errors

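Similar to server connectivity errors, DNS errors occur when Google cannot communicate with your DNS server, or when the DNS lookup fails to return an address for your domain, which means Google cannot locate the server that hosts your content.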

Once again, you’ll need to contact whoever manages your DNS records (often your domain registrar) and your web hosting provider to make sure your records point to the right server and that access is allowed.
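Before contacting anyone, you can run a basic version of the same lookup yourself. This sketch uses Python’s standard `socket.getaddrinfo`; `resolve_host` is a hypothetical helper name. It queries your own machine’s resolver, so a successful result does not guarantee that Google’s resolvers see the same records.

```python
import socket


def resolve_host(hostname: str) -> list[str]:
    """Return the sorted, de-duplicated IP addresses that `hostname` resolves to.

    An empty list means the lookup failed on this machine.
    """
    try:
        results = socket.getaddrinfo(hostname, None)
    except socket.gaierror:
        return []
    # Each result is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] is the IP address for both IPv4 and IPv6.
    return sorted({info[4][0] for info in results})
```

For example, `resolve_host("www.example.com")` should return one or more addresses; an empty list suggests that the domain’s DNS records are missing or that the DNS server cannot be reached.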

Robots.txt Issues

Robots.txt is a small text file stored in the root directory of your web server that lists any URLs on your website that you do not want search engine crawlers to access.

There are various reasons why you might not want certain pages to be crawled, and any URLs blocked by your robots.txt file will be listed in Google Webmaster Tools.
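A robots.txt file groups `Disallow` rules under `User-agent` lines. The sketch below embeds a hypothetical robots.txt and checks it with Python’s standard `urllib.robotparser` module, which is a quick way to confirm you haven’t accidentally blocked a page you want crawled. Bear in mind that `urllib.robotparser` is simpler than Google’s parser: it applies the first matching rule in a group and does not understand the `*` and `$` path wildcards that Googlebot supports.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt. A crawler obeys only the most specific group
# that matches it, so /private/ is repeated in the Googlebot group.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /private/
Disallow: /drafts/
"""


def is_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if `user_agent` may crawl `url` under the given robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for path in ("/", "/private/report.html", "/drafts/post.html"):
    url = "https://www.example.com" + path
    print(path, is_allowed(ROBOTS_TXT, url), is_allowed(ROBOTS_TXT, url, "Bingbot"))
```

This prints `/ True True`, `/private/report.html False False` and `/drafts/post.html False True`: Googlebot is kept out of `/drafts/`, while other crawlers fall back to the `*` group.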

When Google’s crawler visits your website, it will try to find the robots.txt file before it does anything else.

If the file exists but cannot be fetched, you will see a robots.txt fetch error, in which case your hosting provider may be blocking Google’s crawler.
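To see what your server actually returns for robots.txt, you can request it directly. In this sketch, `robots_txt_status` and `describe_robots_status` are hypothetical helpers. The descriptions summarise how Google’s published robots.txt documentation says its crawlers treat each status code: a 4xx response other than 429 is handled as if no robots.txt exists, while a 5xx or 429 response makes Google treat the whole site as temporarily disallowed. Check Google’s current documentation before relying on these details.

```python
import urllib.error
import urllib.request
from urllib.parse import urljoin


def robots_txt_status(site_url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code your server sends for /robots.txt.

    urllib follows redirects, so this is the status of the final response.
    Raises urllib.error.URLError if the server cannot be reached at all.
    """
    robots_url = urljoin(site_url, "/robots.txt")  # always at the site root
    try:
        with urllib.request.urlopen(robots_url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code


def describe_robots_status(status: int) -> str:
    """Summarise Google's documented handling of a robots.txt status code."""
    if 200 <= status < 300:
        return "fetched: Google will parse the rules in the file"
    if status == 429 or 500 <= status < 600:
        return "fetch error: Google treats the whole site as disallowed for now"
    if 400 <= status < 500:
        return "not found: Google crawls as if there were no robots.txt"
    return "unexpected status: check your server configuration"
```

For example, `describe_robots_status(robots_txt_status("https://www.example.com/"))` returns a one-line summary; a persistent 5xx result is the case to raise with your hosting provider.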

Not Found (404) Errors

The infamous 404 error appears when a link leads to a webpage that cannot be found.

While a 404 error is meant to show that the linked page doesn’t exist, the cause is often an incorrect link or another technical issue rather than a genuinely missing page.

To fix a 404 error, either correct the links that point to the missing URL or redirect that URL to an existing and relevant page on your site.

Google also recommends creating a custom 404 error page that includes a list of alternative suggestions and a search box, since this will be far more useful to your visitors than a generic error page.
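Most sites set up redirects and error pages in their web server configuration or CMS, but the logic is the same everywhere. This minimal sketch uses a plain Python WSGI application so the behaviour is easy to see; the URLs, page content and the `REDIRECTS` mapping are hypothetical. Moved pages get a permanent (301) redirect to their closest replacement, and anything else that is missing gets a helpful custom page that still returns a genuine 404 status code.

```python
# Hypothetical mapping of old, broken URLs to their closest live replacement.
REDIRECTS = {
    "/old-services.html": "/services/",
    "/blog/2013/crawl-errors": "/blog/fixing-crawl-errors/",
}

# Pages that actually exist on this toy site.
PAGES = {
    "/": "<h1>Home</h1>",
    "/services/": "<h1>Services</h1>",
    "/blog/fixing-crawl-errors/": "<h1>Fixing crawl errors</h1>",
}

# Custom error page with suggestions and a search box.
NOT_FOUND_PAGE = """<h1>Sorry, we couldn't find that page</h1>
<p>You might be looking for one of these:</p>
<ul><li><a href="/">Home</a></li><li><a href="/services/">Services</a></li></ul>
<form action="/search"><input name="q" type="search"><button>Search</button></form>"""


def app(environ, start_response):
    """WSGI app: 301 for moved URLs, 200 for real pages, custom 404 otherwise."""
    path = environ.get("PATH_INFO", "/")
    if path in REDIRECTS:
        # 301 tells browsers and crawlers that the move is permanent.
        start_response("301 Moved Permanently", [("Location", REDIRECTS[path])])
        return [b""]
    if path in PAGES:
        body, status = PAGES[path], "200 OK"
    else:
        # Keep the real 404 status: serving this page as "200 OK" would
        # turn every missing URL into a soft 404.
        body, status = NOT_FOUND_PAGE, "404 Not Found"
    data = body.encode("utf-8")
    start_response(status, [("Content-Type", "text/html; charset=utf-8"),
                            ("Content-Length", str(len(data)))])
    return [data]
```

To try it locally, pass `app` to `wsgiref.simple_server.make_server("127.0.0.1", 8000, app)`, call `serve_forever()` on the result, and visit a missing URL such as `http://127.0.0.1:8000/nope`.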

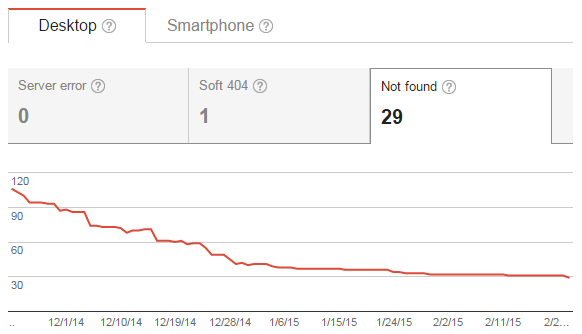

The 404 error section of Webmaster Tools. The site in question appears to have been working to reduce the number of 404s over the last couple of months.

There are many broken link checkers online that you can use to list any offending internal or external links on your website.
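If you would rather check a page yourself, a basic checker only needs to collect the links on a page and request each one. This standard-library sketch is an assumption about how such a tool might work, not how any particular online checker does it: it checks the links on a single page (it does not crawl your whole site), sends one GET request per link, and reports any that return a 4xx/5xx status or cannot be reached (reported as status 0). `LinkCollector`, `status_of` and `find_broken_links` are hypothetical names.

```python
import http.client
import urllib.error
import urllib.request
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def status_of(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status for `url`, or 0 if it could not be reached."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # the server answered with an error status, e.g. 404
    except (OSError, http.client.HTTPException):
        return 0  # e.g. DNS failure, refused connection or timeout


def find_broken_links(page_url: str, timeout: float = 10.0) -> list[tuple[str, int]]:
    """Return (absolute URL, status) for each link on `page_url` that fails."""
    with urllib.request.urlopen(page_url, timeout=timeout) as response:
        html = response.read().decode("utf-8", errors="replace")
    collector = LinkCollector()
    collector.feed(html)

    broken = []
    seen = set()
    for href in collector.links:
        # Resolve relative links and drop #fragments, which never reach the server.
        url = urldefrag(urljoin(page_url, href)).url
        if url in seen or not url.startswith(("http://", "https://")):
            continue  # already checked, or a mailto:/tel:/javascript: link
        seen.add(url)
        status = status_of(url, timeout)
        if status == 0 or status >= 400:
            broken.append((url, status))
    return broken
```

For example, `find_broken_links("https://www.example.com/")` returns a list of `(url, status)` pairs to fix or redirect. Some servers reject automated requests, so an unexpected failure is worth checking by hand.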

Soft 404

You may also see “Soft 404” errors. A soft 404 occurs when a page that doesn’t exist returns a normal “200 OK” status code instead of a 404, or when a missing page is redirected to a destination that Google does not consider relevant or valuable enough to replace it.

Consider redirecting these URLs to a more relevant page on your site, or letting them return a genuine 404 if no suitable replacement exists.
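This is not how Google detects soft 404s, but you can audit your own pages with a rough heuristic. In the sketch below, `looks_like_soft_404` is hypothetical, and the phrase list and 200-character threshold are assumptions to tune for your site. Pass it the final status code and HTML of a page after any redirects have been followed; it flags pages that return 200 OK while reading like an error page or containing almost no text.

```python
import re

# Hypothetical phrases that often appear on "page not found" templates.
NOT_FOUND_PHRASES = ("page not found", "page cannot be found", "no longer available")


def looks_like_soft_404(status: int, html: str, min_text_chars: int = 200) -> bool:
    """Rough heuristic: flag a 200 OK page that reads like an error or thin page."""
    if status != 200:
        return False  # a real 404/410 is a hard error, not a soft 404
    text = re.sub(r"<[^>]+>", " ", html)  # crude tag stripping, fine for a heuristic
    text = " ".join(text.split()).lower()  # collapse whitespace, ignore case
    if any(phrase in text for phrase in NOT_FOUND_PHRASES):
        return True
    return len(text) < min_text_chars  # very little visible text
```

Pages this flags are candidates for a proper 404 status or a redirect to a more relevant page; pages it misses can still be reported by Google, so treat it as a first pass only.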

Conclusion

For the most part, the crawl errors reported in Google Webmaster Tools are fairly intuitive: server-related errors usually need to be resolved with your hosting company, while URL errors are often the result of broken links or inaccessible content.

On a final note, you can use the Fetch as Google feature within Webmaster Tools to view your website as Google’s crawlers see it, which shows you the content that Google can actually access.